2 Cans & a Cloud

At MSquared Fusion we like to have fun. Every now and then we pick up some random thing to do just for a laugh. We even have a side music project! As the holidays are a slower time for us, M2 decided to do a technology project merging multiple aspects of the work we have done in our careers and what we do now. Sort of a way to be hipsters into vintage stuff while implementing newer technologies. Hence the task of building our retro M2 Fusion cluster!

The concept

The main idea for us was to grab one of the Apple designs we loved most and that seems forgotten: the 2013 Mac Pro cans (or subwoofers, as we like to call them). In our opinion that model was sleek, original, and efficient, running Intel Xeons, which were revered at the time. One can turned into two as we found them online for purchase. Now, to play some loud heavy metal vinyl and go to work on what to do with them!

We knew we wanted them together and running as much as we could inside them. Our plan included the following:

- A HashiCorp stack! It needed to run one of our favorite schedulers, Nomad, and Consul for service discovery.

- A Kubernetes cluster. Ya know, stay in touch with the latest.

- Amazon Web Services (AWS)! Yes, we wanted the cans to run our internal cloud. We wanted this so we could code and test locally before sending our work to the real thing for clients.

- Progress Chef. M2 wanted to revisit the glory days of our configuration management kitchen mad skillz, yes…with a ‘z’.

- An internal Docker registry, so we are not embarrassed by posting broken containers out there.

The hardware implementation!

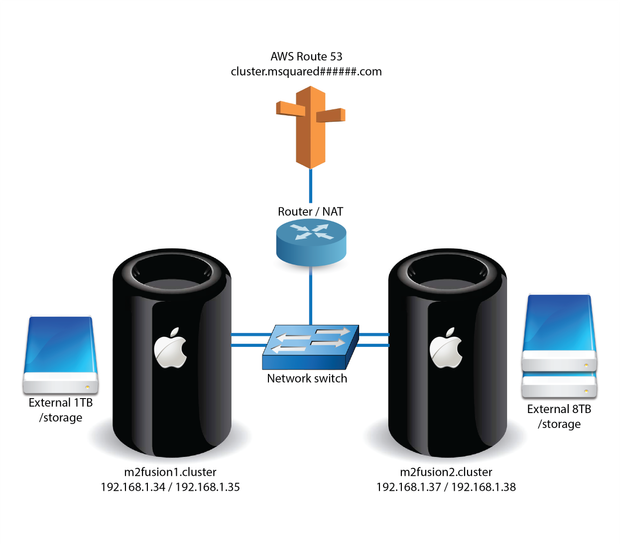

We have been doing cloud for so long that we really forgot actual hardware! Uff, we had to travel back in time as M2 has never been one to have home “labs”. Side note: do folks that have labs at home wear white coats and handle things like pipettes? Just wondering. As we traveled back in time to actual items we could touch, we came up with this simple architecture:

The hardware and network diagram is self-explanatory except for a couple of twists: we added retro FireWire drives, for a total of 8 TB of persistent storage, and a Route 53 record from one of our domains in AWS for external access.
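A record like that could be created along these lines. This is only a minimal sketch, assuming a plain A record: the hosted zone ID, the record name, and the IP address are all placeholders, and the script prints the `aws route53` command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch only: every value below is a placeholder.
set -euo pipefail

HOSTED_ZONE_ID="${HOSTED_ZONE_ID:-Z0000000EXAMPLE}"   # hypothetical hosted zone ID
RECORD_NAME="${RECORD_NAME:-cluster.example.com}"      # hypothetical record name
PUBLIC_IP="${PUBLIC_IP:-203.0.113.10}"                 # documentation-range IP, not a real host

# Write the change batch Route 53 expects for an UPSERT of a single A record.
cat > route53-change.json <<EOF
{
  "Comment": "Point ${RECORD_NAME} at the home cluster",
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "${RECORD_NAME}",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [{ "Value": "${PUBLIC_IP}" }]
      }
    }
  ]
}
EOF

# Print the command instead of running it, so nothing changes until you are ready.
echo aws route53 change-resource-record-sets \
  --hosted-zone-id "${HOSTED_ZONE_ID}" \
  --change-batch file://route53-change.json
```

UPSERT creates the record if it is missing and updates it otherwise, which is handy when a home connection changes its public IP.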

The other layers

To accomplish this, we couldn’t run macOS on the cans, so we went to our favorite Linux distro, Canonical Ubuntu. We replaced the OS with minimal server installs to keep things light. Plus, they were going to be headless anyway.

Our next step was to start overlaying what the systems should run for our development and deployments. For that we used Chef as we miss it a lot. Being serverless, we don’t really see it anymore.

We won’t get into how to install open-source Chef or Chef Workstation, but we created our organization, did all the magic knife stuff, and started coding recipes for Golang, Node.js, Python, and what we needed for our cluster platforms. M2 even coded a recipe for Rancher by SUSE, which we love!

Just as a small sample of our cooking, here is our quick way to run Consul on Docker:

```ruby
#
# Cookbook:: consul_server
# Recipe:: default
#
require 'ohai'

oh = Ohai::System.new
oh.all_plugins(['network'])

nic_name = 'enp12s0' # We had to do this for first Apple NIC
ip_address = oh[:network][:interfaces][nic_name][:addresses].find { |_, info| info[:family] == 'inet' }&.first

if ip_address.nil?
  Chef::Log.warn("IP address not found for interface #{nic_name}")
else
  Chef::Log.info("IP address of #{nic_name}: #{ip_address}")
end

# get image
docker_image 'hashicorp/consul' do
  tag 'latest'
  action :pull
end

docker_volume 'consul' do
  action :create
end

# run container!
docker_container 'consul' do
  repo 'hashicorp/consul'
  volumes 'consul:/consul'
  port ['8500:8500', '8600:8600/udp']
  command 'consul agent -data-dir /consul -server -ui -node=server-1 -bootstrap-expect=1 -client=0.0.0.0'
  env ['SERVICE_NAME=ConsulServer']
  restart_policy 'unless-stopped'
end
```

For your cooking needs, just read here.

(We know, long post, but hardly anyone comes back for part 2 or 3, so it continues.)

We deployed everything that we needed and were ready to push some workloads into Kubernetes and Nomad. However, we wanted the Mac cans to look like more nodes. For this, we implemented Multipass. Multipass lets you quickly and smoothly launch Ubuntu VMs, even with cloud-init files, which allowed us to attach the VMs to our clusters as effective workers in Nomad and Kubernetes.
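To give a feel for the Multipass plus cloud-init combination, here is a minimal sketch rather than our actual files. The VM name, the sizes, and the Nomad server address are assumptions, installing and starting Nomad inside the VM is left out, and the script prints the `multipass launch` command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch only: names, sizes, and the server address are assumptions.
set -euo pipefail

VM_NAME="${VM_NAME:-can-worker-1}"              # hypothetical VM name
NOMAD_SERVER="${NOMAD_SERVER:-192.168.1.10}"    # hypothetical Nomad server address
CLOUD_INIT="cloud-init-${VM_NAME}.yaml"

# cloud-init user data: drop a Nomad client config into the VM on first boot.
# Installing and starting Nomad itself is not covered by this sketch.
cat > "${CLOUD_INIT}" <<EOF
#cloud-config
package_update: true
write_files:
  - path: /etc/nomad.d/client.hcl
    content: |
      client {
        enabled = true
        servers = ["${NOMAD_SERVER}:4647"]
      }
EOF

# Print the launch command instead of running it.
echo multipass launch --name "${VM_NAME}" --cpus 2 --memory 4G --disk 20G \
  --cloud-init "${CLOUD_INIT}"
```

Older Multipass releases spell the memory flag `--mem`, so check `multipass launch --help` on your version before copying the printed command.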

Our AWS

Now we had Nomad, Consul, and Kubernetes running:

```shell
# CONSUL STUFF
$ consul catalog services
consul
docker-registry
mysql-server
nginx
nomad-client
nomad-server

$ consul catalog nodes -service=docker-registry
Node      ID        Address     DC
server-1  e7ee2c41  172.17.0.2  dc1

# NOMAD STUFF
$ nomad job status docker-registry
ID            = docker-registry
Name          = docker-registry
Submit Date   = 2024-01-05T14:03:18Z
Type          = service
Priority      = 50
Datacenters   = dc1
Namespace     = default
Status        = running

# KUBE STUFF
$ kubectl get svc
NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.152.183.1     <none>        443/TCP        9d
nginx        NodePort    10.152.183.188   <none>        80:31978/TCP
```

At this point, we can deploy whatever we want! Now, for what we really wanted… our own AWS, published at an endpoint, to develop and test stuff before hitting your clouds. For this we used LocalStack. We deployed it into Kubernetes and it was flawless. So, seriously, we did all of this work to be able to:

```shell
# create bucket
$ awslocal s3api create-bucket --bucket mybucket --region us-east-1 --endpoint-url=http://m2fusion2.cluster:31566
{
    "Location": "/mybucket"
}

# list buckets
$ awslocal s3 ls --endpoint-url=http://m2fusion2.cluster:31566
2023-12-30 15:31:09 m2fusion
2024-01-06 16:23:27 mybucket

# copy file from local to our bucket
$ awslocal s3 cp record.json s3://mybucket/ --endpoint-url=http://m2fusion2.cluster:31566
upload: ./record.json to s3://mybucket/record.json

# verify it is there
m2fusion@m2fusion1:~$ awslocal s3 ls mybucket/ --endpoint-url=http://m2fusion2.cluster:31566
2024-01-06 16:25:23        250 record.json

# create DynamoDB table
$ awslocal dynamodb create-table \
    --table-name mcapture \
    --key-schema AttributeName=id,KeyType=HASH \
    --attribute-definitions AttributeName=id,AttributeType=S \
    --billing-mode PAY_PER_REQUEST \
    --region us-east-1 --endpoint-url=http://m2fusion2.cluster:31566

# verify
$ awslocal dynamodb list-tables --region us-east-1 --endpoint-url=http://m2fusion2.cluster:31566
{
    "TableNames": [
        "mcapture"
    ]
}
```
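Typing the endpoint on every command gets old quickly. As a small sketch, and assuming a recent `awslocal` or AWS CLI v2 (both should pick up the `AWS_ENDPOINT_URL` environment variable), the endpoint and region can be exported once per shell session:

```shell
# Same endpoint and region used in the commands above.
export AWS_ENDPOINT_URL="http://m2fusion2.cluster:31566"
export AWS_DEFAULT_REGION="us-east-1"

# With these exported, the flags can be dropped, for example:
#   awslocal s3 ls
#   awslocal dynamodb list-tables
```

If your CLI version ignores the variable, keep passing `--endpoint-url` explicitly.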

Conclusion

This was a super fun exercise for M2 Fusion. Also a practical one, as we are now able to host our own cloud and develop our upcoming products in an isolated environment at a very low cost while staying retro hipster. Can this run on a single server? Sure… but what is the fun in that when we can use some of the sleekest machines ever designed and stay true to our punk philosophy?

For more details on this fun project, drop us a line!

Thank you for reading this super long post.

#cloudinfrastructure #cloudengineering #devops #hashicorp #terraform #softwareintegration #mediaentertainment